Welcome to SIA Copper Line - Official Website

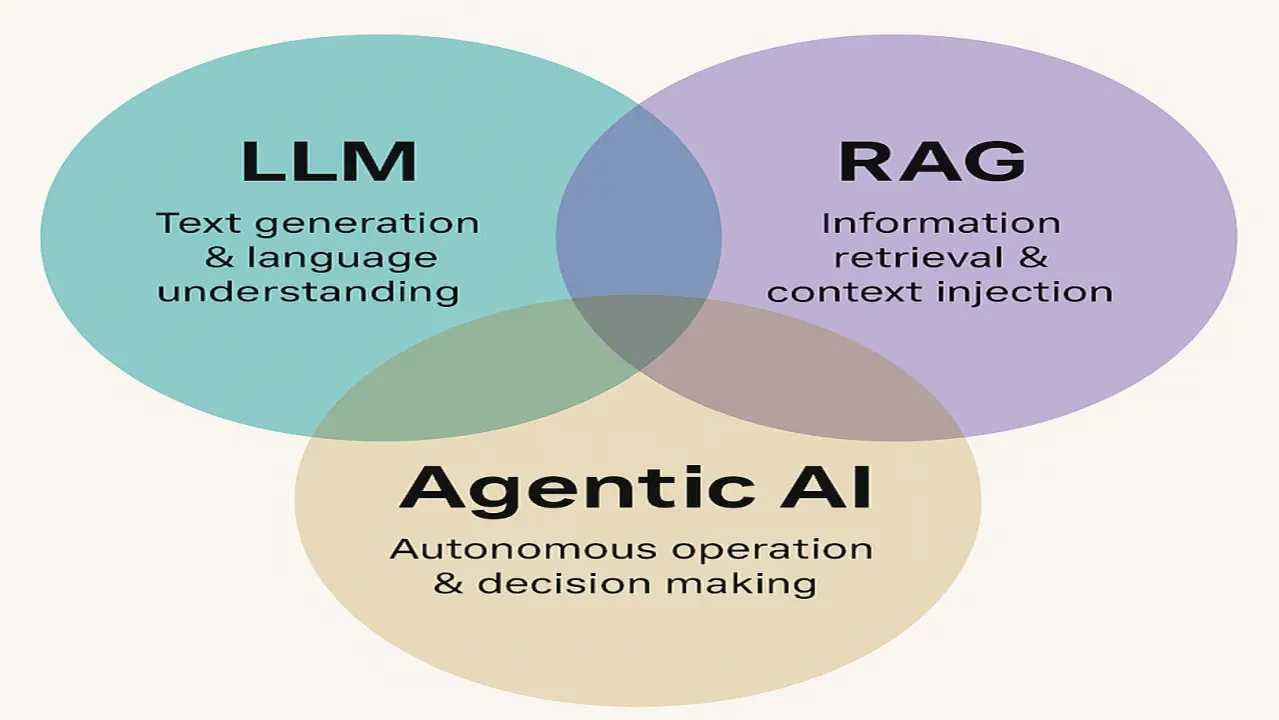

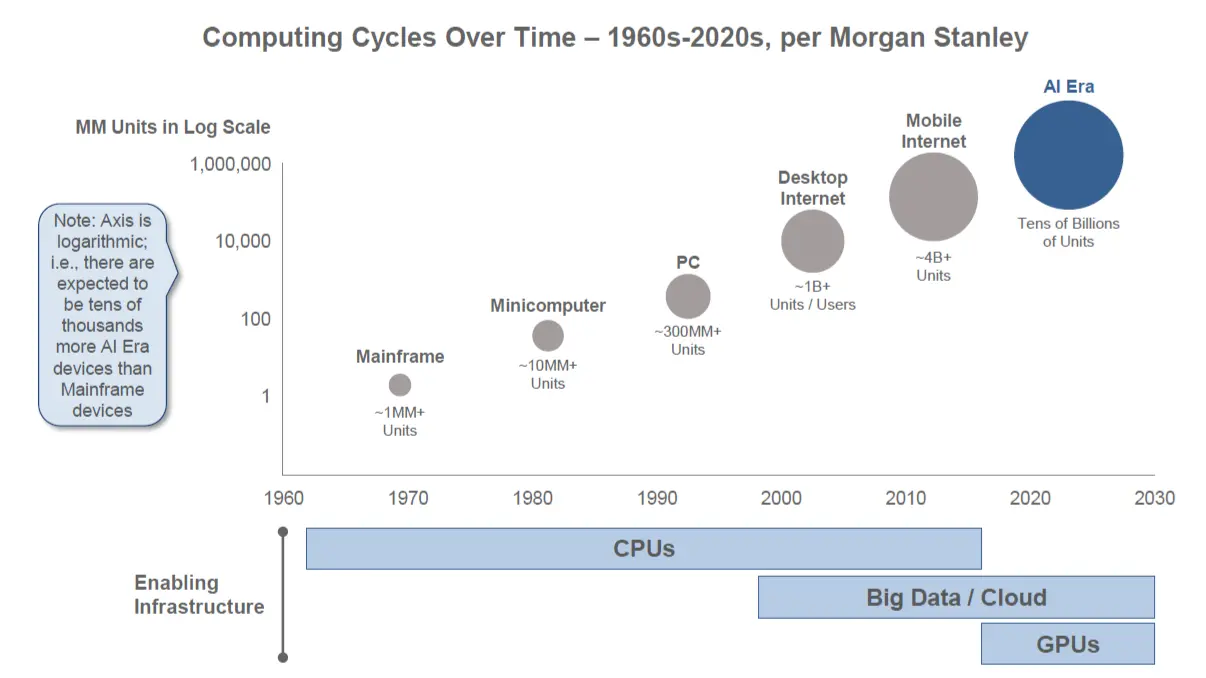

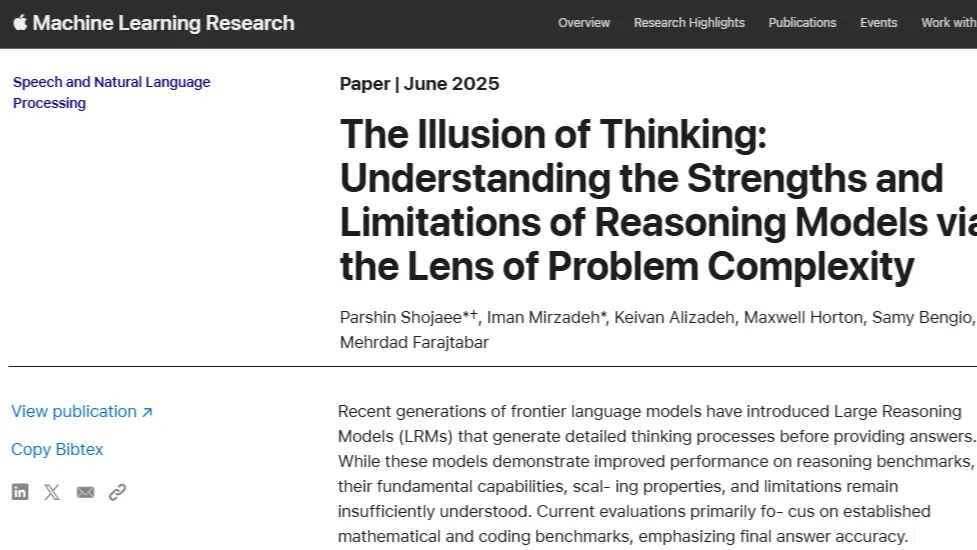

Our focus is on affordable automation through AI-based agents, daily performance monitoring, and real-time business alerts that keep you informed about the health of your business at all times.

Our platform integrates with Airtable to track daily operational data against monthly plans. We generate visual dashboards and send automated WhatsApp alerts for early detection of stock issues, sales drops, or production failures.

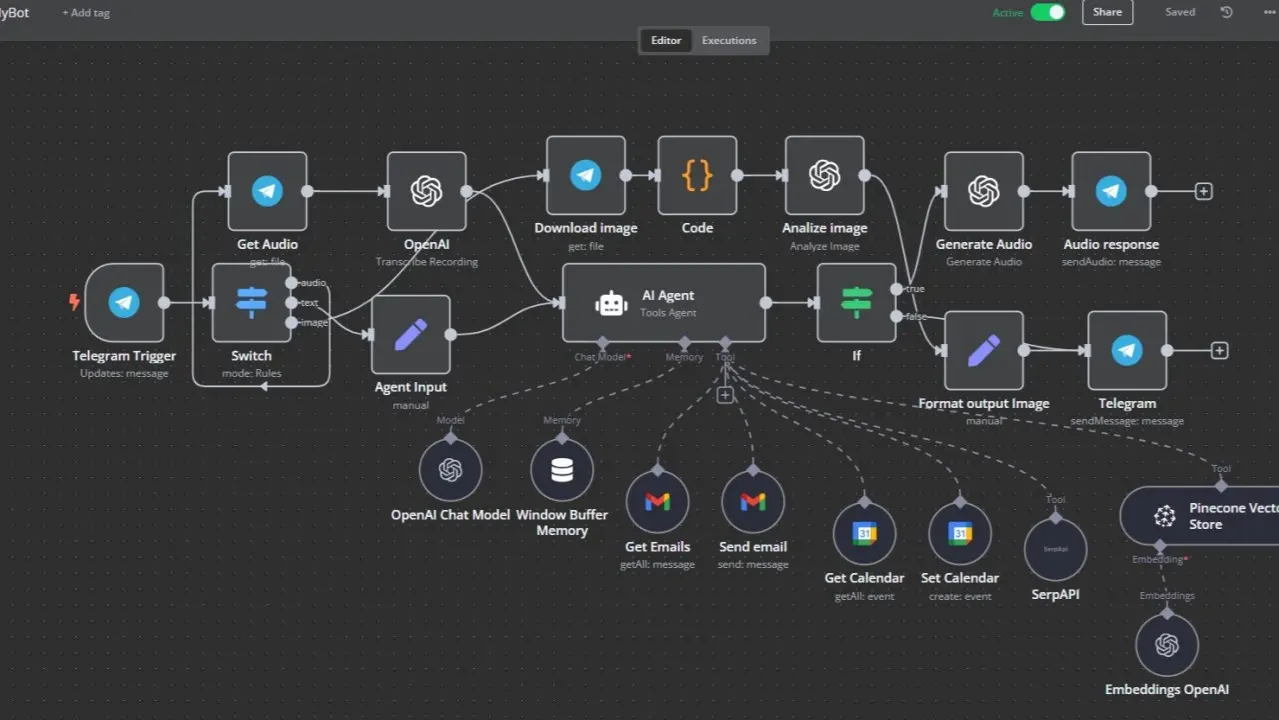

All of the automation logic is built in n8n, enabling end-to-end data automation. Business owners can monitor and control operations remotely through clear daily metrics.

Our founder holds a Ph.D. in Economics, with research focused on automated production management systems, and an EMBA from the Stockholm School of Economics, with a thesis titled "Automation of managerial control for quick-service restaurant chains".

Read our LinkedIn articles:

Privacy Policy

Last updated: 20.04.2025

This Privacy Policy describes how SIA Copper Line collects, uses, and shares information when you use our services, including our WhatsApp Business integration.

Information We Collect

We may collect the following information:

- Contact information (name, email address, phone number)

- Business information related to your use of our services

- Communication data when you interact with our services

- Technical data such as device information and usage statistics

How We Use Your Information

- To provide and improve our services

- To personalize your experience

- To send notifications and alerts related to your business operations

- To comply with our legal obligations under the EU GDPR

Data Sharing

We may share your information with:

- Service providers who help us deliver our services (e.g., Airtable, WhatsApp)

- Legal authorities when required by law

We do not sell your personal information to third parties.

Data Security

We implement appropriate technical and organizational measures to protect your personal data against unauthorized access, alteration, disclosure, or destruction.

Data Retention

We retain your data only as long as necessary to provide the service or as required by law.

Your Rights

Under the GDPR, you have the right to:

- Access your personal data

- Rectify inaccurate data

- Request deletion of your data

- Restrict or object to processing

- Data portability

To exercise these rights, please contact us at info@copperline.info.

Contact

If you have questions about this Privacy Policy, please email us at info@copperline.info.

Terms of Service

Last updated: 20.04.2025

By using SIA Copper Line services, you agree to the following terms:

1. Service Description

SIA Copper Line provides intelligent agent-based solutions that automate routine business operations and implement management control tools for small enterprises. Our services include integration with Airtable and WhatsApp for business monitoring and alerts.

2. User Responsibilities

You agree to:

- Provide accurate information when using our services

- Maintain the confidentiality of your account credentials

- Comply with all applicable laws and regulations

3. Intellectual Property

All content and technology provided by SIA Copper Line are protected by intellectual property laws. You may not copy, modify, or distribute our technology without permission.

4. Limitation of Liability

SIA Copper Line shall not be liable for any indirect, incidental, or consequential damages arising from the use of our services.

Contact Us

SIA COPPER LINE

VAT number EU: LV50203082681

LEI number: 2549002OO19GM8GJZG60

Email: info@copperline.info

Phone: +371 22030215

Address: Latvia, Jurmala, Rigas iela 49-28